Crawling website data is useful for automating tasks that you perform frequently on web pages. You can write a crawler that interacts with a site just as a human would. This article from Emergenceingames.com shows you how to crawl website data with Selenium.

Automating Google Chrome involves a tool called Selenium, a software component that sits between your program and the browser. Here's how to crawl a website by using Selenium to automate Google Chrome.

How to crawl a website using Selenium

Setting up Selenium

WebDriver

As mentioned above, Selenium includes a software component that runs as a separate process and performs actions in the browser on behalf of your Java program. This component is called WebDriver, and you must download it to your computer.

Download the latest version of the Chrome driver for your computer's operating system (Windows, Linux or macOS). Extract the ZIP file to a suitable location, such as C:\WebDrivers\chromedriver.exe. You will need this location in your Java program.
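One way to pass that location to your Java program is the webdriver.chrome.driver system property, which Selenium's Chrome support reads when it starts the driver. The following is a minimal sketch that uses only the standard library, so it runs without Selenium installed. The class name DriverLocation, the method configure and the example path are assumptions made for illustration.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

final class DriverLocation {
    /** Name of the system property that Selenium's Chrome support reads. */
    static final String PROPERTY = "webdriver.chrome.driver";

    /**
     * Registers the driver executable if it exists.
     * Returns true when the property was set, false when the file is missing.
     */
    static boolean configure(Path driverExecutable) {
        if (!Files.isRegularFile(driverExecutable)) {
            return false;
        }
        System.setProperty(PROPERTY, driverExecutable.toAbsolutePath().toString());
        return true;
    }

    public static void main(String[] args) {
        // Assumed location; adjust it to wherever you extracted the ZIP file.
        Path driver = Paths.get("C:\\WebDrivers\\chromedriver.exe");
        if (configure(driver)) {
            System.out.println("Using driver at " + System.getProperty(PROPERTY));
        } else {
            System.out.println("Driver not found at " + driver);
        }
    }
}
```

Call configure() before you create the ChromeDriver object, so that a wrong path is reported up front.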

Java Modules

The next step is to set up the Java modules needed to use Selenium. Assuming you build your Java program with Maven, add the following dependency to pom.xml:

<dependencies>
    <dependency>
        <groupId>org.seleniumhq.selenium</groupId>
        <artifactId>selenium-java</artifactId>
        <version>3.8.1</version>
    </dependency>
</dependencies>

When you run the build, Maven downloads all the required modules and installs them on your computer.

First steps with Selenium

Let's get started with Selenium. The first step is to create a ChromeDriver instance:

WebDriver driver = new ChromeDriver();

A new Google Chrome window will appear on the screen. Navigate to the Google search page:

driver.get("http://www.google.com");

Next, get a reference to the text input so that you can run a search. The text input element is named q. You locate HTML elements on the page with the WebDriver.findElement() method.

WebElement element = driver.findElement(By.name("q"));

You can send text to any element with the sendKeys() method. Send a search term that ends with a newline so that the search starts immediately:

element.sendKeys("terminator\n");

Now that the search is running, you need to wait for the results page. You can do that as follows:

new WebDriverWait(driver, 10)
    .until(d -> d.getTitle().toLowerCase().startsWith("terminator"));

This code tells Selenium to wait up to 10 seconds and to return as soon as the page title starts with terminator. The wait condition is defined with a lambda function.
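To make the idea of a lambda-based wait condition concrete, here is a minimal sketch of a polling loop written with the standard library only. It is not Selenium's implementation: the class PollingWait and its until() method are illustrative names, and this version returns false on timeout, whereas WebDriverWait throws a TimeoutException.

```java
import java.time.Duration;
import java.util.function.Supplier;

final class PollingWait {
    /**
     * Calls the condition repeatedly until it returns true or the timeout elapses.
     * Returns true on success and false on timeout or interruption.
     */
    static boolean until(Supplier<Boolean> condition, Duration timeout, Duration pollInterval) {
        long deadline = System.nanoTime() + timeout.toNanos();
        while (true) {
            if (Boolean.TRUE.equals(condition.get())) {
                return true;
            }
            if (System.nanoTime() - deadline >= 0) {
                return false; // timed out
            }
            try {
                Thread.sleep(pollInterval.toMillis());
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt(); // preserve the interrupt flag
                return false;
            }
        }
    }

    public static void main(String[] args) {
        long start = System.currentTimeMillis();
        // Stand-in condition: becomes true about 300 ms after start.
        boolean ready = until(() -> System.currentTimeMillis() - start >= 300,
                Duration.ofSeconds(10), Duration.ofMillis(100));
        System.out.println("Condition met: " + ready);
    }
}
```

The condition is checked once before the first sleep, so a page that is already loaded does not cost any waiting time.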

You can now get the page title:

System.out.println("Title: " + driver.getTitle());

Once done with the session, close the browser window:

driver.quit();

That is a simple browser session controlled from Java via Selenium. Although it is simple, it lets you automate many things that you would normally have to do by hand.
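If an exception is thrown before driver.quit() is reached, the Chrome window and the driver process can be left running. A common way to guard against this is to call quit() in a finally block. The sketch below shows the pattern with a hypothetical BrowserSession interface that stands in for WebDriver, so it runs without a browser. The interface, the SafeSession class and the fake page title are assumptions made for illustration.

```java
import java.util.function.Function;

final class SafeSession {
    /** Runs the work, then always quits the session, even if the work throws. */
    static <T> T run(BrowserSession session, Function<BrowserSession, T> work) {
        try {
            return work.apply(session);
        } finally {
            session.quit();
        }
    }

    public static void main(String[] args) {
        // Fake session for demonstration only; no browser is started.
        BrowserSession fake = new BrowserSession() {
            @Override public String getTitle() { return "placeholder title"; }
            @Override public void quit() { System.out.println("Browser closed"); }
        };
        String title = run(fake, BrowserSession::getTitle);
        System.out.println("Title: " + title);
    }
}

/** Hypothetical stand-in for the two WebDriver methods used in this session. */
interface BrowserSession {
    String getTitle();
    void quit();
}
```

With Selenium, the same shape applies: create the driver, do the work inside try, and call driver.quit() inside finally.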

Using Google Chrome Inspector

Google Chrome Inspector is an invaluable tool for identifying the elements to use with Selenium. It lets you target precise elements from Java, both to extract information and to perform interactive actions such as clicking buttons. Here's how to use the Inspector.

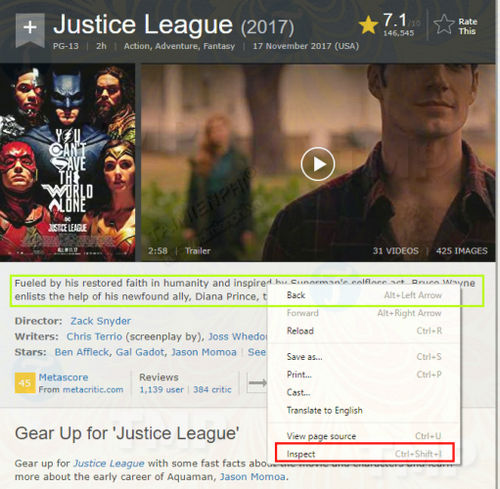

Open Google Chrome and navigate to a page, say the IMDb page for the movie Justice League (2017).

Right-click the movie summary and select Inspect from the menu.

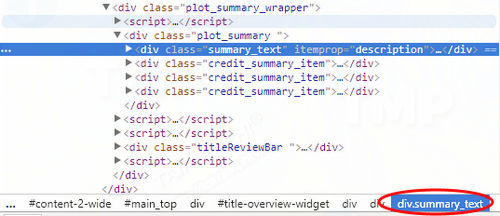

In the Elements tab, you can see that the summary text is a div with the class summary_text.

Use CSS or XPath

Selenium supports selecting elements from the page using CSS selectors. (The supported CSS dialect is CSS2.) For example, to select the summary text from the IMDb page above, you would write:

WebElement summaryEl = driver.findElement(By.cssSelector("div.summary_text"));

You can also use XPath to select elements in the same way. To select the summary text:

WebElement summaryEl = driver.findElement(By.xpath("//div[@class='summary_text']"));

XPath and CSS have similar capabilities, so you can use whichever you are more comfortable with.
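One difference is worth knowing when you translate between the two. The CSS selector div.summary_text matches any div whose class list contains summary_text, while the XPath test @class='summary_text' compares the whole attribute value. The sketch below demonstrates this with the XPath engine that ships with the JDK (javax.xml.xpath) instead of Selenium, and with a small made-up markup snippet, so it runs without a browser. The class name ClassMatchDemo and the sample markup are assumptions made for illustration.

```java
import java.io.StringReader;
import javax.xml.parsers.DocumentBuilderFactory;
import javax.xml.xpath.XPathConstants;
import javax.xml.xpath.XPathFactory;
import org.w3c.dom.Document;
import org.w3c.dom.NodeList;
import org.xml.sax.InputSource;

final class ClassMatchDemo {
    // Made-up, well-formed markup; the second div carries two class names.
    static final String PAGE =
            "<html><body>"
            + "<div class='summary_text'>First summary</div>"
            + "<div class='summary_text ready'>Second summary</div>"
            + "</body></html>";

    // Exact comparison of the whole class attribute.
    static final String EXACT = "//div[@class='summary_text']";

    // Token match, which mirrors how the CSS selector div.summary_text behaves.
    static final String TOKEN =
            "//div[contains(concat(' ', normalize-space(@class), ' '), ' summary_text ')]";

    /** Counts the nodes in PAGE that the given XPath expression selects. */
    static int count(String xpath) {
        try {
            Document doc = DocumentBuilderFactory.newInstance()
                    .newDocumentBuilder()
                    .parse(new InputSource(new StringReader(PAGE)));
            NodeList nodes = (NodeList) XPathFactory.newInstance()
                    .newXPath()
                    .evaluate(xpath, doc, XPathConstants.NODESET);
            return nodes.getLength();
        } catch (Exception e) {
            throw new IllegalStateException("Could not evaluate " + xpath, e);
        }
    }

    public static void main(String[] args) {
        System.out.println("exact match: " + count(EXACT)); // selects only the first div
        System.out.println("token match: " + count(TOKEN)); // selects both divs
    }
}
```

If an element you target has several classes, the token form of the XPath expression can be passed to By.xpath() in the same way as the shorter one.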

Read Google Mail from Java

Here’s a more complex example: fetching Google Mail.

Start the Chrome driver, navigate to gmail.com and wait until the page loads:

WebDriver driver = new ChromeDriver();
driver.get("https://gmail.com");
new WebDriverWait(driver, 10)
    .until(d -> d.getTitle().toLowerCase().startsWith("gmail"));

Next, find the email field (it has the ID identifierId) and enter the email address. Click the Next button and wait for the password page to load.

/* Type in username/email */
{
    driver.findElement(By.cssSelector("#identifierId")).sendKeys(email);
    driver.findElement(By.cssSelector(".RveJvd")).click();
}
new WebDriverWait(driver, 10)
    .until(d -> !d.findElements(By.xpath("//div[@id='password']")).isEmpty());
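The snippets in this section use email and password variables that the article does not define. One option, sketched below under stated assumptions, is to read them from environment variables so that they are not hard-coded in the source. The class name Credentials and the variable names GMAIL_USER and GMAIL_PASSWORD are hypothetical.

```java
import java.util.Map;
import java.util.Optional;

final class Credentials {
    final String email;
    final String password;

    private Credentials(String email, String password) {
        this.email = email;
        this.password = password;
    }

    /**
     * Looks up both values in the given map (normally System.getenv()).
     * GMAIL_USER and GMAIL_PASSWORD are assumed variable names.
     */
    static Optional<Credentials> from(Map<String, String> env) {
        String email = env.get("GMAIL_USER");
        String password = env.get("GMAIL_PASSWORD");
        if (email == null || email.isEmpty() || password == null || password.isEmpty()) {
            return Optional.empty();
        }
        return Optional.of(new Credentials(email, password));
    }

    public static void main(String[] args) {
        Optional<Credentials> creds = from(System.getenv());
        if (creds.isPresent()) {
            System.out.println("Will log in as " + creds.get().email);
        } else {
            System.out.println("Set GMAIL_USER and GMAIL_PASSWORD first");
        }
    }
}
```

The email and password fields of the returned object can then be passed to sendKeys() in the login steps.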

Now, we enter the password, click the Next button again and wait for the Gmail page to load.

/* Type in password */
{
    driver
        .findElement(By.xpath("//div[@id='password']//input[@type='password']"))
        .sendKeys(password);
    driver.findElement(By.cssSelector(".RveJvd")).click();
}
new WebDriverWait(driver, 10)
    .until(d -> !d.findElements(By.xpath("//div[@class='Cp']")).isEmpty());

Fetch a list of email rows and iterate over each item.

List<WebElement> rows = driver
    .findElements(By.xpath("//div[@class='Cp']//table/tbody/tr"));
for (WebElement tr : rows) {
    // The per-row blocks that follow go inside this loop.
}

For each row, fetch the From field. Note that a From field can contain several elements, depending on the number of people in the conversation.

{
    /* From Element */
    System.out.println("From: ");
    for (WebElement e : tr
            .findElements(By.xpath(".//div[@class='yW']/*"))) {
        System.out.println(" " +
            e.getAttribute("email") + ", " +
            e.getAttribute("name") + ", " +
            e.getText());
    }
}

Next, fetch the subject.

{
    /* Subject */
    System.out.println("Sub: " + tr.findElement(By.xpath(".//div[@class='y6']")).getText());
}

And the date and time of the message.

{
    /* Date/Time */
    WebElement dt = tr.findElement(By.xpath("./td[8]/*"));
    System.out.println("Date: " + dt.getAttribute("title") + ", " +
        dt.getText());
}

Finally, print the total number of email rows on the page.

System.out.println(rows.size() + " mails.");

Once done, close the browser window.

driver.quit();


Above is how to crawl website data using Selenium with Google Chrome. With Google Chrome Inspector, you can easily find the CSS or XPath to extract or interact with the element.
